Once upon a time, there was a tech conference and a grand plan to have it properly recorded and published for others to enjoy. And then it all went haywire.

Every year at Makimo, we hold a company tech conference called CodeBetter. It’s an open space for everyone to share their experiences and ideas in front of an audience. It’s also a great way to get together and discuss what we’ve all achieved over the past year. Despite the name, all topics and areas are welcome, not just programming-related ones.

We did our first impromptu recordings of all the talks in 2021, and this year I thought: hey, let’s do it Better™ this time. As you can imagine from the title and the opening paragraph, it didn’t go according to plan. Let’s salvage some worthwhile takeaways from this, shall we?

The original 2021 setup and its shortcomings

When we first recorded the CodeBetter conference in 2021, it was mostly a welcome afterthought: we primarily wanted a workable stream. It was the first CodeBetter in the pandemic-stricken world, with many team members working remotely and unwilling to attend a live event. Streaming was an obvious choice, but also one that posed technical hurdles.

We wanted to find a way to hold a live event for those who were at the office at that time while streaming the talks for others. Ironically, with everyone working remotely and no live audience to consider, it would actually have been much easier.

Back then, we had neither the hardware nor the experience for such a setup. We used our Blue Yeti microphone on a boom stand to capture the speaker and the audience without constraining the speakers’ movement. For video, we relied on a wide-angle GoPro connected as a webcam.

Instead of doing an unfamiliar streaming setup with OBS or other broadcasting software, we relied on a proven conferencing tool: Google Meet. A meeting was set up for everyone working remotely to join, watch, and listen. A sidecar laptop joined the meeting with the microphone and GoPro connected. Each speaker joined the call when it was their turn and shared the slides directly from their laptops. As a bonus feature, remote teams could easily participate in a Q&A session after each talk.

With that in place, we used the built-in Meet recording feature to capture each talk to a video file. The recordings automatically showed the speaker’s face on the right and the shared presentation as the hero. We then slapped a graphic overlay on top in post, et voilà.

Note: You can watch last year’s videos here.

Nothing was particularly wrong with this setup. It served its purpose well and brought no surprises. It did, however, have apparent shortcomings: the audio wasn’t great, with lots of echo and room noise. The camera quality was poor, and it didn’t allow for speaker closeups. The visual layout of the recording was not customizable, and the speaker tile was tiny. Last but not least: Google Meet records a heavily compressed 720p video, a far cry from what YouTube teaches us to expect.

I knew I wanted to do better next time around.

The plan

Knowing the date of the conference early, I knew that this time we would need to prepare well in advance to achieve what I had envisioned.

The plan was to have clear audio for the speaker and a separate one for the audience to ask questions. We’ve all seen those talks where the question from the audience can’t be heard at all, and the speaker won’t repeat it, right? I wanted to have two separate camera angles: one for the speaker closeup and a wide-angle room view for the introductions and freedom of movement. With that in mind, I envisioned different visual layouts for the speaker and slides combo as well as just the full-screen speaker view. Finally, I intentionally wanted to record it in 1080p with high compression quality. As I write these words, these still feel like pretty reasonable expectations.

A trait of mine that often comes in handy but equally often gives me headaches is trying to use the tools we already have at our disposal to their full extent, sometimes in unorthodox ways, before even considering buying additional stuff. In this instance, the coin landed on headache.

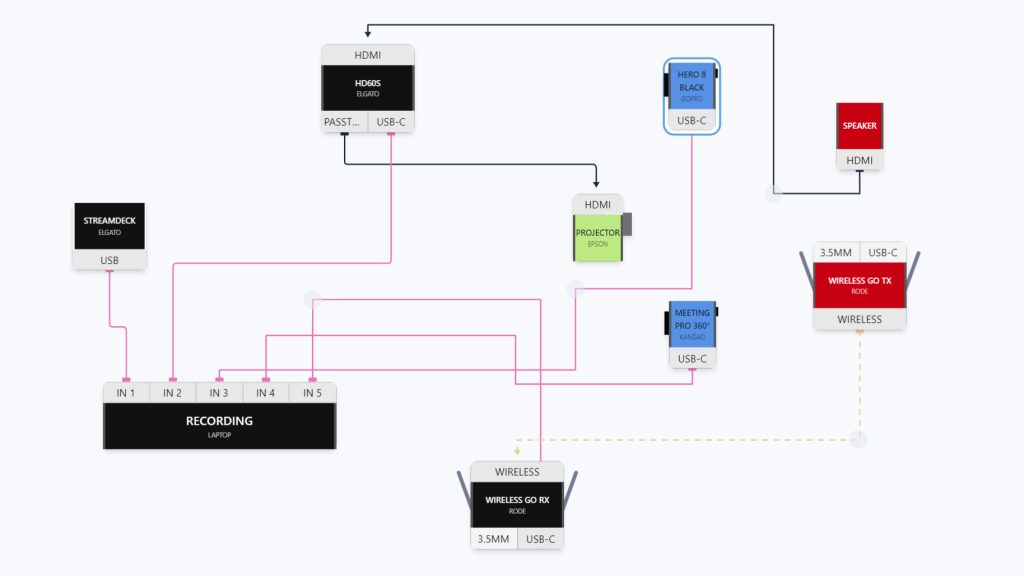

To fix the echoey audio, we bought a set of Røde Wireless Go 2 with external lavalier microphones. A device I can’t recommend highly enough — it simply does its job well and is really convenient. To overcome the visual layout & recording quality limitations, OBS seemed like an obvious choice. I could prepare different scene layouts, connect all video & audio inputs I needed, and record with the click of a button.

For video, I knew we had a few DSLRs lying around with a variety of high-quality lenses. This must trump a measly GoPro, right? Or so I thought. Without much further consideration, I went ahead and purchased HDMI capture cards to get the video from these cameras into the OBS setup, as well as to seamlessly capture the presentation screen from the speakers’ laptops before it hits the projector.

With that and a good dose of excitement, I started connecting everything, prototyping, and testing.

What went wrong and how we pivoted

It quickly turned out that my contraption of DSLR cameras and capture cards worked better in theory than in practice. And not because it was a bad idea in general, but rather because I didn’t anticipate issues I should’ve double-checked beforehand.

What I didn’t mention earlier is that those DSLRs were not exactly fresh from the store. I knew they could record 1080p video and simply took the rest for granted, but learned the hard way that it’s not enough. One of the cameras had no clean HDMI output at all. Another didn’t output full 1080p through HDMI, and… overheated quickly. Both could be used as USB webcams through the manufacturers’ drivers but with limited resolution and limited runtime. Bummer! That idea went out the window quickly. I should have known better.

Grinding my teeth, I said sorry to the GoPro and used it for the wide-angle. I also connected a 360° webcam with a face-following feature as the main camera, which was an unexpected but nice addition to the setup. Sadly, any wished-for improvement in video quality now simply wasn’t there.

Everything else, however, worked as expected. The audio from the lavalier microphones was clear and clean. Capturing the slides with an Elgato card was a breeze and required no setup from the speakers. The video, albeit not very crisp, was reliable and palatable. Last but not least, OBS recorded good quality clips, and scene switching was smooth and seamless.

I tested the setup repeatedly with different computers to make sure their HDMI outputs wouldn’t cause problems when capturing the slides. I also wanted to pinpoint possible issues with audio recording in case someone has a long beard or just speaks much louder than the others.

Happy with the consistent outcome, I connected the maze of cables the day before the event, made sure everything was charged, and went home.

The day of the event

The next day, we all gathered at the venue and did some final checks. The mics worked, the cameras worked, and capturing the slides was fine too. So far, so good.

The welcoming speech felt like a good final trial to confirm that we were in the clear. After recording that part, I listened to the recording at multiple points to check for glitches or other issues. Aside from the subpar sharpness of the GoPro, it was pristine. We were all in for a treat with so many solid talks ahead.

Fully reassured, I hit record for the first speaker and sat back to enjoy the talk. And then, the GoPro crashed and disconnected randomly. Murphy’s law at its finest, I thought, jumping to the second camera angle for the rest of the talk. Then, I reconnected the GoPro and continued.

Smooth sailing continued for the next talk or two, but then I noticed a frozen frame on the slides capture. The speaker was already ahead, but the video still recorded the welcome slide. Changing the input resolution back and forth helped, but afterwards I safeguarded myself from further issues by performing this ritual before every talk.

The rest of the day, from the recording perspective, was uneventful. I ended up with cleanly cut files for each talk, almost ready to be published with little post-processing needed, and went to enjoy the time with the team at the conference after-party.

We’ll have all the recordings published tomorrow, I said…

The post-conference fallout

…but that never happened.

As soon as I started working on the recordings the next day, something felt wrong. Really wrong. The opening speech was recorded perfectly fine. The first talk seemed fine, too. There was the GoPro disconnection glitch, but I could live with that. But then I played the second one. The audio recording was all over the place. Crackling, screeching, unintelligible. I went back to check the earlier recording, and it turned out to be broken too, just later on. The first half was mostly okay, but then the stutters started.

I had opted to record multiple audio tracks separately in OBS: from the lavalier mics, the room microphone, and even the GoPro. Sadly, none were usable, and I had no clue why. We had tested the setup extensively to ensure this wouldn’t happen, right?

I went through the recordings one by one to check if all were lost to this issue. And yet, no! The fourth one was perfectly fine, top to bottom. And the fifth one, once again, not. Why on Earth? I checked the conference schedule and found the fourth talk was just after a long break.

That gave me an idea.

I replicated a similar setup and hit record, just like we did during our testing. However, that time I left it running for a full hour and not twenty minutes.

The problem revealed itself in full glory after recording for long enough. The laptop’s CPU overheated and throttled. With limited computing power, OBS started dropping frames below the expected framerate, which led to a broken audio stream.

And that was it. A very simple explanation for a very unfortunate and, at this point, irrecoverable failure.

The takeaways

In the history of software engineering, many major outages boiled down to straightforward explanations and, often, user errors. Think about how a typo took down AWS S3, the backbone of the internet, or how GitLab’s massive data loss happened in 2017.

While the story of my botched recordings is trivial on its own, it touches on many of the same areas as more severe incidents we encounter in our daily jobs as engineers. It’s deceptively easy to approach seemingly simple problems with little thought, but even the smallest efforts deserve proper engineering.

I distilled 7 takeaways from this encounter that show how the same principles can be applied to avoid or remedy similar blunders, regardless of the scale.

Learn by doing and enjoy the process

While knowing the theory well and understanding how to approach a problem is vital, no amount of reading trumps actual experimentation and prototyping. You can swiftly identify bottlenecks, pain points, limitations, and methods to overcome encountered problems by doing so.

Just like a pianist doesn’t just go from concert stage to concert stage, always perfectly prepared by just reading the sheet music, you can benefit from all forms of practice and experimentation in smaller environments, pet projects, or quick & dirty prototypes. Making mistakes during learning can actually be fun, so enjoy the process and build confidence for bigger things.

If I had tested all the individual components one by one, I would have quickly noticed the DSLRs wouldn’t get me anywhere.

Fail fast and learn from mistakes

Sometimes encountering a failure is inevitable, but the sooner you know about it, the better. Run your efforts quickly and try your ideas in practice as soon as you can. Rather than layering a grandiose vision and waiting for the showtime, start small, prototype, and — most importantly — execute.

When something isn’t working, quickly change course and learn from mistakes instead of continuing down the wrong path.

If I had tried the same recording scenario for a smaller event and a limited audience, I would have encountered the same issue without consequences.

Overengineering can be a recipe for disaster

Small but meticulously executed trumps big and sloppy. As engineers, we often design for conditions that won’t happen, traffic that won’t be there, and features that will never be implemented. Knowing where to strike the right balance between simplicity and future-proofing is a sign of mastery in a given field.

Ultimately, it’s often easier and better to refactor the solution when it shows strain than to design for the (unknown) future and cope with an overcomplicated system daily.

If I hadn’t tried all the things at once, with multiple audio tracks, multiple camera angles, capture cards, and whatnot, but had decided on a simpler but perfectly satisfactory setup, the recordings would have been fine.

Production environments always hold surprises

It seems obvious and intuitive that test environments should resemble production environments to ensure we are testing the right thing. No one expects that if the database copes perfectly with 100 test rows, it will behave exactly the same with millions of them. Getting them as close together as viable can make that gap smaller but never really closes it.

Production environments always hold surprises. The traffic patterns are never exactly the same, the data is never exactly the same, and the infrastructure often differs ever so slightly. Being ready to encounter that and act upon it can help avoid many headaches. Using practices like continuous delivery and canary deployments can help prevent substantial disruptions.

If I hadn’t assumed that my test results would carry over to the day of the event, I would have been more vigilant and alert for any issues.
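One way to make the gap between test and production visible is to compare how long the setup was tested with how long it has to run under load without a pause. The sketch below assumes a hypothetical schedule of `(start, end)` minutes and treats any break shorter than `min_break` as continuous load. The schedule and the 15-minute cooldown are invented for illustration; only the twenty-minute test duration comes from the story above.

```python
def longest_continuous_stretch(sessions, min_break=15):
    """Return the longest stretch of load, in minutes.

    sessions: list of (start_min, end_min) tuples sorted by start time.
    Gaps shorter than min_break do not count as a cooldown.
    """
    longest = 0
    stretch_start = None
    prev_end = None
    for start, end in sessions:
        # A long enough break (or the very first session) starts a new stretch.
        if prev_end is None or start - prev_end >= min_break:
            stretch_start = start
        longest = max(longest, end - stretch_start)
        prev_end = end
    return longest


# Hypothetical schedule: three talks, a long break, then two more talks.
schedule = [(0, 20), (25, 55), (60, 90), (135, 165), (170, 200)]
tested_minutes = 20  # the test duration mentioned in the story

required = longest_continuous_stretch(schedule)
if tested_minutes < required:
    print(f"Test ran {tested_minutes} min, but the event needs {required} min without a break.")
```

A check like this does not prove the setup will survive, but it flags when a test was shorter than the load it is meant to represent.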

Monitor and don’t assume things won’t break silently

Testing a solution successfully at a given point in time doesn’t guarantee it stays this way. The environment in which software works often fluctuates over time, impacting the running system in multiple ways. Utilize continuous monitoring tools and choose informative metrics that will help monitor the solution’s health.

Ideally, early warning signs surface in the monitoring metrics as slight deviations from the norm, giving time to diagnose & remedy issues before they escalate and surprise everyone with a bang.

If I hadn’t taken the welcome speech recording quality for granted and monitored the output frame rate or, better yet, output audio, I would have noticed the issue during the first talk and not the next day.
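As a rough illustration of such a metric, the sketch below flags intervals in which the measured frame rate falls below the expected one. It assumes periodic samples of `(elapsed_seconds, total_frames_written)` are available from somewhere. How to obtain them from the recording software is left open, and the function name, the 30 fps target, and the 5% tolerance are all assumptions made for this example.

```python
def find_fps_drops(samples, expected_fps=30.0, tolerance=0.05):
    """Return (start_s, end_s, measured_fps) for every interval that is too slow.

    samples: list of (elapsed_seconds, total_frames_written), in time order.
    An interval is flagged when its frame rate is below
    expected_fps * (1 - tolerance).
    """
    threshold = expected_fps * (1.0 - tolerance)
    drops = []
    for (t0, f0), (t1, f1) in zip(samples, samples[1:]):
        elapsed = t1 - t0
        if elapsed <= 0:
            continue  # skip duplicate or out-of-order samples
        fps = (f1 - f0) / elapsed
        if fps < threshold:
            drops.append((t0, t1, fps))
    return drops


# Made-up samples taken once a minute: healthy at first, then degrading.
samples = [(0, 0), (60, 1800), (120, 3600), (180, 5100), (240, 6300)]
for start, end, fps in find_fps_drops(samples):
    print(f"{start}-{end}s: {fps:.1f} fps, below target")
```

With these made-up numbers, the first two minutes pass and the last two are flagged, which is the kind of early signal that would have been visible during the first talk.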

Consider contingency & fail-over strategies

You can’t predict everything and be resilient to everything, but you can analyze, anticipate, and design for typical failures. Understanding and writing down what can fail and in which circumstances can help design the solution in either a resilient fashion or with a clear back-up strategy when that happens.

Ideally, high availability could be implemented by design, with no single point of failure and automatic fail-overs so that issues won’t ever be visible to the end users. Practically, in many situations, a checklist or a clear plan of what to do when certain problems happen can help contain those issues calmly and swiftly.

If I had anticipated that cameras could disconnect randomly, I would have prepared fail-over scenes, e.g., with just slides, to keep the recording going.
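Such a fail-over plan can be as simple as an ordered list of scenes with the inputs each one needs. The sketch below picks the first scene whose inputs are all still connected. The scene and source names are hypothetical, and actually switching scenes in the recording software is outside its scope.

```python
# Scenes in order of preference, each with the sources it requires.
# All names are hypothetical placeholders.
SCENES = [
    ("speaker_and_slides", {"closeup_cam", "slides"}),
    ("wide_and_slides", {"wide_cam", "slides"}),
    ("slides_only", {"slides"}),
    ("holding_card", set()),  # static image, needs no live source
]


def pick_scene(available_sources, scenes=SCENES):
    """Return the most preferred scene whose required sources are all available."""
    for name, required in scenes:
        if required <= available_sources:  # subset check
            return name
    raise LookupError("no scene can be shown with the available sources")


# The close-up camera has disconnected: fall back to the wide angle.
print(pick_scene({"wide_cam", "slides"}))
```

Writing the fallback order down beforehand is the point here: the decision is made calmly in advance rather than in the middle of a talk.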

Know your tools well

Make sure you don’t just use the tools but know them well and understand their features, limitations, and quirks. By doing so, you can utilize them to their fullest potential and avoid struggling and hitting walls.

The best solutions are often the simplest, and it’s easy to overcomplicate things unnecessarily. As a very imaginative example, check out how Command-line Tools can be 235x Faster than your Hadoop Cluster in the article by Adam Drake.

If I had known that the Røde Wireless Go 2 could record audio to its internal memory when used as a standalone microphone, I would have been able to salvage the recordings with backup audio tracks.

Summary

Failures are inevitable and will happen to all of us in many circumstances. Some will be minor and negligible, like a road bump; others will be tough to handle and can have far-reaching ripple effects. All can benefit from the same principle, though: doing a proper, detailed post-mortem analysis of what happened.

It’s not without reason that the FAA and EASA prepare complex, detailed reports after even the smallest aviation incidents.

By investigating what happened and why, you can, among other things:

- Grow your analytical thinking and investigative skills

- Deepen your understanding of all the tools & environment

- Avoid similar mistakes in the future

- Document your findings for other team members and help them avoid such mistakes too

- Sharpen your senses to notice warning signs earlier and react accordingly

All these things make us better engineers, as opposed to mindlessly acknowledging mistakes and moving on. Don’t get discouraged by failures. Get your hands dirty with new ideas that also might be susceptible to failure. Or, better yet, lead to a dazzling success!

And if you need a team that isn’t afraid of hurdles and learns from them…

Let’s talk!

CTO @ Makimo. Software engineer at heart who learns and adapts quickly and likes making impossible things possible. Technical writer for DigitalOcean and technical editor for Helion. After work, a wine writer and an amateur pianist.